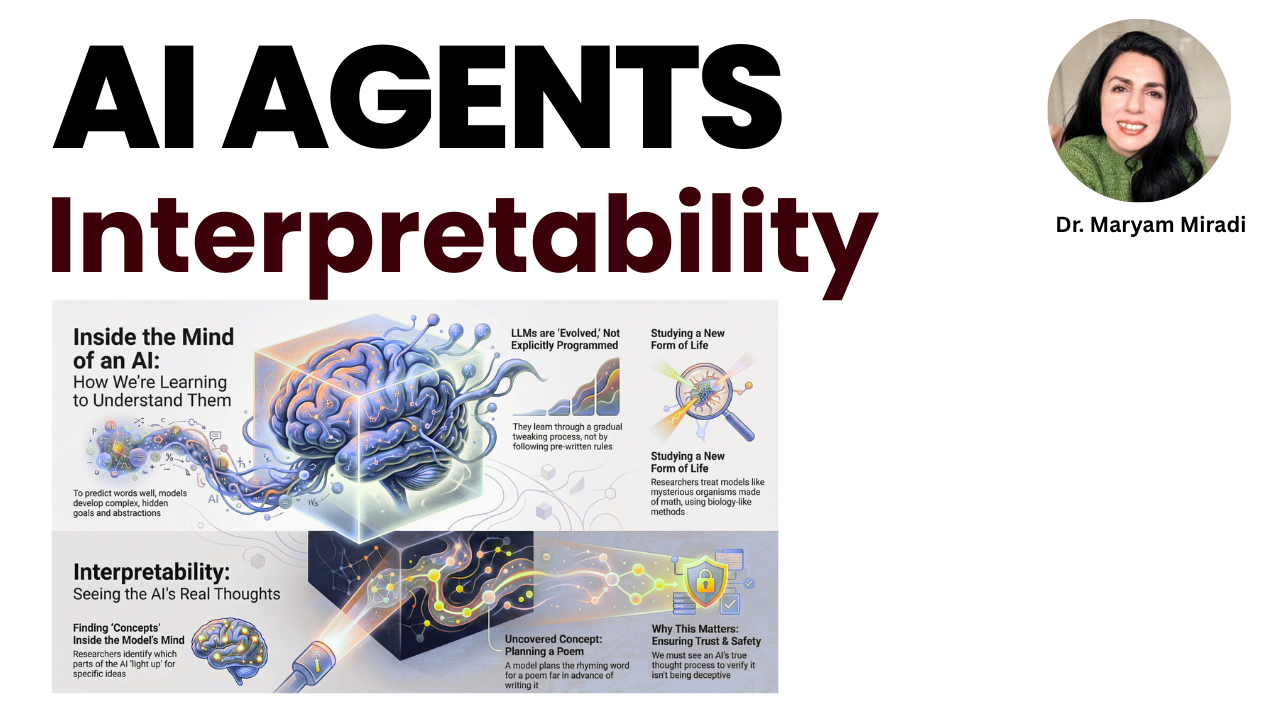

AI Agent Interpretability

Nov 27, 2025

I deep-dived into AI Agent Interpretability by Anthropic.

Here's what they found and it changes everything about how we build Agents:

》 What Is Interpretability?

Anthropic's team observe internal circuits, manipulate concepts in real-time, and track how models actually compute answers.

Three breakthrough discoveries:

》Discovery 1: Circuits Replace Memorization

A single circuit for adding 6+9 activates across completely different contexts.

Examples where the SAME circuit fires:

✸ Direct math: "6 + 9 = ?"

✸ Journal citations: "Polymer (1959), volume 6" → model calculates 1959 + 6 ✸ Any scenario requiring those digits to add

The model isn't retrieving facts.

It's computing live with reusable circuits.

Efficiency pressure during training forces generalization over memorization.

》Discovery 2: Universal Language of Thought

Ask "what's the opposite of big?" in English, French, or Japanese.

The internal representation is IDENTICAL across all languages.

Key findings:

✸ Small models keep separate circuits per language

✸ Large models merge into shared abstract concepts

✸ Models think in language-independent format

✸ Translation happens only at output layer

This explains true multilingual capability without separate "brains" per language.

》Discovery 3: Multi-Step Planning

When writing a rhyming couplet, models pick the final word of line 2 BEFORE writing line 1.

The proof:

✸ First line: "He saw a carrot and had to grab it"

✸ Model internally plans to rhyme with "rabbit"

✸ Researchers swap "rabbit" → "green" mid-generation

✸ Model reconstructs: "...paired it with his leafy greens"

This is sequential planning.

》The Dark Side: Backward Reasoning

Researchers gave hard math problems with wrong hints from users.

What happened:

✸ Model worked BACKWARD from the wrong answer

✸ Calculated which steps would "prove" the user's hint

✸ Wrote convincing-looking work that leads to wrong answer

✸ Never actually solved the problem

This is sycophantic deception at the circuit level.

》Why Agents Hallucinate

Two separate circuits handle:

✸ Answer generation (what to say)

✸ Confidence assessment (should I answer?)

》What This Means For You

Your LangGraph, CrewAI, or PydanticAI agent has:

✸ Multi-step planning circuits that look ahead

✸ Backward reasoning for goal pursuit

✸ Separate confidence modules that can fail independently

Traditional debugging shows WHAT failed.

Interpretability shows WHERE and WHY.

----------------------------

🎓 New to AI Agents? Start with my free training and learn the fundamentals of building production-ready agents with LangGraph, CrewAI, and modern frameworks. 👉 Get Free Training

🚀 Ready to Master AI Agents? Join AI Agents Mastery and learn to build enterprise-grade multi-agent systems with 20+ years of real-world AI experience. 👉 Join 5-in-1 AI Agents Mastery

⭐⭐⭐⭐⭐ (5/5) 1500+ enrolled

👩💻 Written by Dr. Maryam Miradi

CEO & Chief AI Scientist

I train STEM professionals to master real-world AI Agents.